The bwUniCluster 3.0 provides the Baden-Württemberg scientific community with a modern high-performance computing system that supports a wide range of disciplines. The cluster complements the state-wide bwHPC infrastructure.

| Key figure | Value |
| --- | --- |
| Theoretical peak performance | 8.8 PFLOP/s |
| Registered users | 1094 (last change: May 2025) |
| Active users | 135 (last change: May 2025) |
| Nodes | 393 (NDR200-InfiniBand, HDR200-InfiniBand) |
| Main memory | 127.15 TiB |
| HPC tier | Tier 3 |
| CPU cores | 27,632 (Intel, AMD) |
| GPUs | 188 (Nvidia, AMD) |
| Predecessor | bwUniCluster 2.0 |

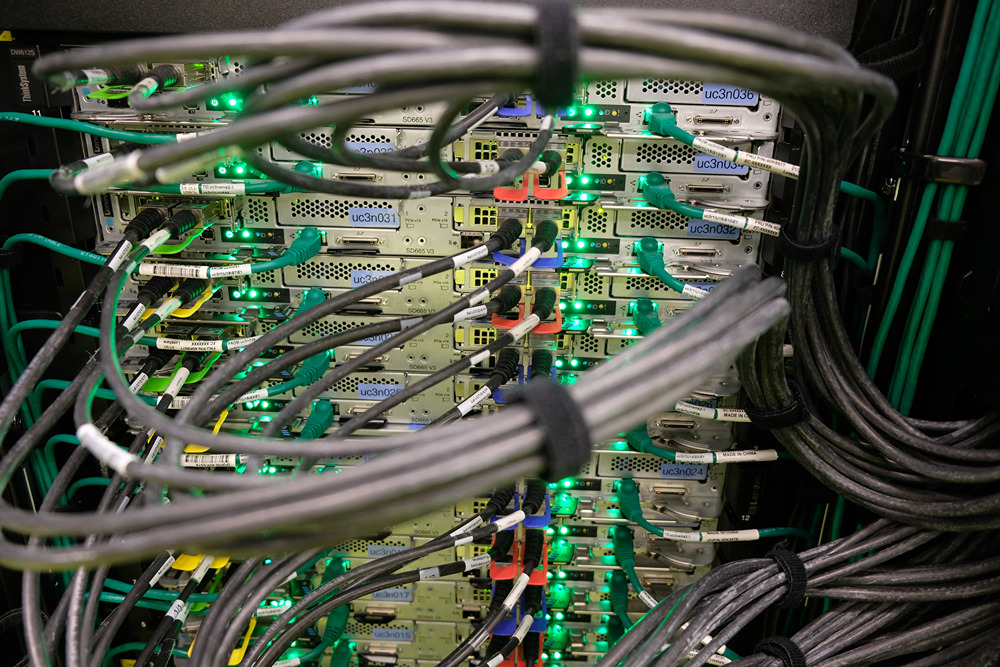

On April 7, 2025, the new parallel computing system “bwUniCluster 3.0+KIT-GFA-HPC 3” (bwUniCluster 3.0 for short) went into operation as a state service within the Baden-Württemberg implementation concept for high-performance computing (bwHPC).

The HPC system consists of more than 370 SMP nodes with 64-bit processors from AMD and Intel. It provides basic computing capacity to the universities in the state of Baden-Württemberg, and employees of all these universities can use it free of charge.

The new bwUniCluster 3.0 system

bwUniCluster 3.0 is a massively parallel computer with more than 370 nodes in total. Around 90 of these are new components operated at KIT Campus North. Thanks to their hot-water cooling, they can be operated in a more resource-efficient manner and also contribute to heating the surrounding office buildings. Around 280 nodes come from the 2022 expansion of bwUniCluster 2.0 and continue to be operated at KIT Campus South. Overall, the system is smaller than its direct predecessor, but significantly more powerful hardware increases its computing power while reducing its power consumption.
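To get a feel for the scale of an individual node, the key figures listed at the top of this page can be divided by the node count. The following is a minimal sketch; the function name `per_node_averages` is hypothetical, and because the cluster combines older and newer nodes with different CPUs and GPUs, the results are only rough averages, not the specification of any actual node.

```python
# Key figures as published on this page (May 2025).
NODES = 393
CPU_CORES = 27_632
MAIN_MEMORY_TIB = 127.15
GPUS = 188


def per_node_averages(nodes, cores, memory_tib, gpus):
    """Return rough per-node averages; real nodes differ by hardware type."""
    return {
        "cores_per_node": cores / nodes,
        "memory_gib_per_node": memory_tib * 1024 / nodes,  # 1 TiB = 1024 GiB
        "gpus_per_node": gpus / nodes,
    }


averages = per_node_averages(NODES, CPU_CORES, MAIN_MEMORY_TIB, GPUS)
for name, value in averages.items():
    print(f"{name}: {value:.1f}")
```

Averaged over all 393 nodes, this comes to roughly 70 CPU cores and 331 GiB of main memory per node. The GPU average is below one per node, which simply reflects that only part of the system is GPU-equipped.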

The base operating system on each node is Red Hat Enterprise Linux (RHEL) 9.x. The cluster is managed with KITE, a software environment developed at SCC for operating heterogeneous computing clusters.

The global file system is the scalable, parallel Lustre file system, which is connected via a separate InfiniBand network. The use of several Lustre Object Storage Target (OST) servers and Metadata Servers (MDS) provides both high scalability and redundancy if individual servers fail.
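On a Linux node, mounted Lustre file systems appear in the kernel mount table with the file system type `lustre`. The sketch below shows one way to list them by parsing text in the format of `/proc/mounts`. The function name `lustre_mounts` is hypothetical, and the server address and mount points in the sample are made up for illustration; they are not the actual bwUniCluster 3.0 configuration.

```python
def lustre_mounts(mounts_text):
    """Return the mount points whose file system type is 'lustre'.

    Each line of /proc/mounts has the form:
    device mountpoint fstype options dump pass
    """
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[2] == "lustre":
            points.append(fields[1])
    return points


# Hypothetical sample; device names and paths are assumptions.
sample = """\
/dev/sda1 / xfs rw,relatime 0 0
10.0.0.1@o2ib:/home /home lustre rw,flock 0 0
10.0.0.1@o2ib:/work /work lustre rw,flock 0 0
"""
print(lustre_mounts(sample))

# On a real Linux node, read the live mount table instead:
# with open("/proc/mounts") as f:
#     print(lustre_mounts(f.read()))
```

Parsing the mount table needs no Lustre-specific tools, so the same check works on any node regardless of which client utilities are installed.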

Access and Registration

Each state university regulates access to this system for its own employees. Users who already have access to bwUniCluster 2.0 automatically receive access to bwUniCluster 3.0; they only need to register for this service at bwidm.scc.kit.edu. Further details on registration and access to this state service can be found at wiki.bwhpc.de/e/BwUniCluster3.0.

Support

If you have any questions or require assistance, please do not hesitate to contact us via the bwSupportPortal.